Towards AI Transparency with Model Factsheets

- Bruno Ohana

- Apr 5, 2022

Updated: Oct 12, 2023

At biologit, we’re making transparency an integral part of our development process, and we’re excited to release the first factsheet for the Suspected Adverse Event model in biologit MLM-AI. The model assists medical literature monitoring workflows in pharmacovigilance, veterinary and medical device surveillance.

Model Factsheets were proposed by Arnold et al. (2019) as documentation that discloses the key characteristics of an AI system: how its data was curated, the model design decisions, its intended use, and its trade-offs. This improved understanding can play a valuable role in managing the risks of AI systems.

“Factsheets help prevent overgeneralization and unintended use of AI services by solidly grounding them with metrics and usage scenarios” (Arnold et al., 2019)

In addition, the emerging regulatory guidance for AI in pharmacovigilance (Huysentruyt et al., 2021) and the resources from the Partnership on AI’s ABOUT ML initiative helped us shape our version, which currently covers:

- Business problem
- Intended use (target domain, inputs, operational envelope)
- Data curation and labeling protocol
- Training data characteristics
- Description of the machine learning models and inference pipeline
- Performance metrics and experimental results

We will continue to update and extend the factsheets for MLM-AI as the platform evolves, and we would love to hear your feedback.

About biologit MLM-AI

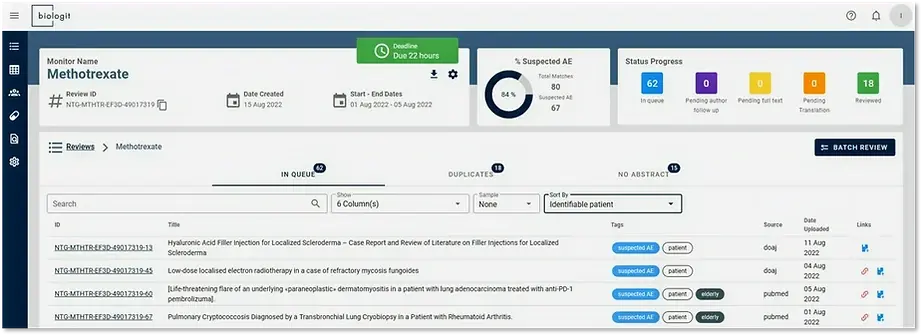

biologit MLM-AI is a complete literature monitoring solution built for safety surveillance teams.

Its flexible workflow, unified scientific database and unique AI productivity features deliver fast, inexpensive and fully traceable results for any screening need in pharmacovigilance, medical device and veterinary safety surveillance.

References

(Arnold et al., 2019) - Arnold M, et al. FactSheets: Increasing trust in AI services through supplier's declarations of conformity. IBM J Res Dev. 2019;63(4/5):6:1.

(Huysentruyt et al., 2021) - Huysentruyt K, et al. Validating Intelligent Automation Systems in Pharmacovigilance: Insights from Good Manufacturing Practices. Drug Saf. 2021 Mar;44(3):261-272.

Comments